Jesse Seidule recently posted a video in which he challenges the Dall-E 2 AI to replicate some existing game art. With a lot of help from Jesse, the AI made a recognizable attempt, but Jesse noted many ways in which the human art exceeded the AI art in quality. The issues that Jesse raised support his conclusion that AI art currently can’t replace human art. I have found similar issues with the AI-generated art that I’ve posted on Club Gnome.

However, having been a computer science professor, I can tell you that the researchers working on this will be able to address many of these issues, though not all of them, using straightforward approaches over the next 3-10 years.

I’ll outline a few issues that I’m confident researchers will solve with straightforward approaches. Then I will compare the processes that humans and AIs use, and I will conclude with a few thoughts about where (some!) human artists will retain a durable competitive advantage.

Issues amenable to straightforward approaches

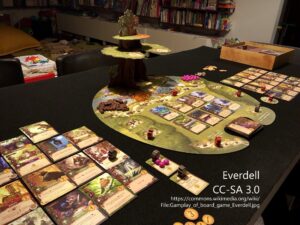

One issue that Jesse repeatedly mentioned is lack of detail. It’s certainly not the only issue (see below!), but it’s plain when you compare the incredible level of brushstroke detail in Everdell to what AIs can do right now. This issue is largely due to limitations of computational capacity. Each spatial unit of the generated image is processed thousands or millions of times as the AI works. The servers affordable for free demos like the ones Jesse used cannot divide the image into small enough units to generate a highly detailed image in a reasonable amount of time. The approach for solving this is straightforward: lease more servers or more powerful servers. Or just wait a few years for computational power to increase thanks to Moore’s Law.
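To put rough numbers on the "just wait" option, here is a back-of-the-envelope sketch. It rests on two assumptions of mine, not on anything the AI vendors have published: that the work grows in proportion to the number of spatial units (so doubling each side of a square image quadruples the work), and that affordable computing power doubles about every two years. The function names are made up for illustration.

```python
import math


def relative_work(side_scale: float) -> float:
    """Work multiplier when each side of a square image grows by side_scale.

    Assumes cost is proportional to the number of spatial units (pixels).
    """
    return side_scale ** 2


def years_to_absorb(work_multiplier: float, doubling_period_years: float = 2.0) -> float:
    """Years of hardware doublings needed to do the extra work at the same cost.

    The two-year doubling period is an assumed, Moore's-Law-style figure.
    """
    return doubling_period_years * math.log2(work_multiplier)


if __name__ == "__main__":
    for scale in (2, 4, 8):
        work = relative_work(scale)
        years = years_to_absorb(work)
        print(f"{scale}x finer per side: {work:.0f}x the work, about {years:.0f} years of waiting")
```

Under these assumptions, an image four times finer on each side costs sixteen times the work, which is four doublings, or roughly eight years if nobody simply pays for more servers.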

Another issue that Jesse raised was the poor representation of form and juxtaposition, such as a janky astronaut foot or how a Viking’s legs seemed to float over the shoreline. This issue is illustrated by the image below, generated by giving the prompt “eldritch horror mushroom kittens” to Midjourney. Such flaws are possibly the result of inadequate training data, as well as limitations in computational capacity that keep the AI from making a billion passes over each spatial unit instead of millions. But, mainly, the AI simply hasn’t yet seen enough body parts in enough situations to draw them correctly all the time. And, honestly, how often have you seen an eldritch horror mushroom kitten yourself? The result does embody a certain kind of serendipity, though it’s not entirely clear that such a creature could exist under our universe’s physical laws. The solution is to continue training the AI with more data, giving it exposure to more human expertise. There might always be a need for an artist to touch up some minor mistakes, but the amount of work will become smaller and eventually negligible.

One more issue that Jesse raised was poor choice of aesthetics and composition. This is a tough one! It’s illustrated by the picture at the head of this article, generated by giving the prompt “phalanx of gnomes hiding under trees in preparation to ambush goblins” to Midjourney. Essentially, the AI needs to get a feel for what looks “good” in terms of positioning of characters relative to the camera, framing, color balance, etc. A reasonable approach for this would be to use post-process filtering with machine learning. To wit, the researchers have configured the AI to generate many versions of the image that the user requested. The user’s choice of which version to scale up implicitly tells the AI which of the versions is “best.” The AI can learn from this feedback, increasing the likelihood of generating more images with this combination of aesthetics and composition in the future. In particular, this preference signal can be back-propagated through the image-generation algorithm so that the AI is less likely to develop a version with poor composition or similar issues in the first place. This, too, is a matter of time and investment.
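Here is a minimal sketch of how "which version did the user scale up?" can become a training signal. It is a toy of my own, not how Midjourney or Dall-E 2 actually work: each candidate image is reduced to a few made-up composition features (say, subject centering, clutter, color balance), a linear score ranks the candidates, and each user choice nudges the weights by one gradient step on a softmax choice model. It covers only the post-process filtering part, not back-propagation through the generator itself. The simulated user and every name in it are hypothetical.

```python
import math
import random


def softmax(scores):
    """Turn raw scores into choice probabilities (numerically stable)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def score(weights, features):
    """Linear 'how good does this look' score for one candidate."""
    return sum(w * f for w, f in zip(weights, features))


def update(weights, candidates, chosen, lr=0.1):
    """One gradient-ascent step on log P(chosen | candidates)."""
    probs = softmax([score(weights, c) for c in candidates])
    new_weights = []
    for j, w in enumerate(weights):
        expected = sum(p * c[j] for p, c in zip(probs, candidates))
        new_weights.append(w + lr * (candidates[chosen][j] - expected))
    return new_weights


def train(hidden_taste, rounds=2000, n_candidates=4, seed=0):
    """Learn weights from a simulated user who always picks by hidden_taste."""
    rng = random.Random(seed)
    weights = [0.0] * len(hidden_taste)
    for _ in range(rounds):
        # Hypothetical feature vectors standing in for generated versions.
        candidates = [[rng.uniform(-1, 1) for _ in hidden_taste]
                      for _ in range(n_candidates)]
        # The simulated user scales up the version they like best.
        chosen = max(range(n_candidates),
                     key=lambda i: score(hidden_taste, candidates[i]))
        weights = update(weights, candidates, chosen)
    return weights


if __name__ == "__main__":
    learned = train(hidden_taste=[2.0, -1.0, 0.5])
    print("learned weights:", [round(w, 2) for w in learned])
```

Once trained, the same `score` function can be used as the filter: generate many versions, then show only the top-scoring few. The hard part in real life is the bit I waved away, namely turning an image into features that actually capture composition.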

In short, straightforward approaches can mitigate or eliminate several issues associated with technical execution, including lack of detail, oddball shapes, and representational choices such as framing.

Comparing human and AI processes

I want to recapitulate an artistic process that I think reveals where AI is going and where it falls short.

Human artists generally look at other art to train their aesthetics and get ideas for the elements of a specific piece. They then start with a few rough sketches to explore concepts, and they experiment to see what feels good. This creates opportunities for serendipity–for example, orienting the character’s face just so makes him look cute, and this triggers an idea about lighting, and so on. The artist then makes sequential passes over the piece, establishing the composition, the mood, the forms, the lighting, the detail, etc.

An AI is likewise trained by looking at other art, developing both an aesthetic sense and a means of mapping from human text to elements. It makes a rough sketch and uses this to explore possible directions for the piece. The algorithm judges the sketch and uses those judgments to update its choices of how to draw the piece. It then uses these new choices to generate a version that has more and better detail than the previous iteration. There is some opportunity for serendipity, but each iteration is a small change, which creates the possibility of settling into a “locally optimal” output rather than finding a better, globally optimal one (i.e., it comes up with something that looks like the human’s desired output, but it’s not really the best possible output).
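The "locally optimal" trap is easy to see in a toy example. The sketch below is plain hill climbing on a made-up one-dimensional "quality" curve with a small peak and a taller one. It is not how an image generator works internally; it only illustrates why small, always-improving steps can stall on the lesser peak, and how trying several starting points (loosely, several rough sketches) helps. The curve and all names are my own assumptions.

```python
import math


def quality(x: float) -> float:
    """Made-up quality curve: a small peak near x=1, a taller one near x=5."""
    return math.exp(-(x - 1) ** 2) + 2 * math.exp(-(x - 5) ** 2)


def hill_climb(f, x: float, step: float = 0.05, max_iters: int = 10_000) -> float:
    """Take small steps while they improve f; stop at the first peak reached."""
    for _ in range(max_iters):
        best = max((x - step, x + step), key=f)
        if f(best) <= f(x):
            break  # no neighbor is better: a local optimum
        x = best
    return x


def climb_with_restarts(f, starts) -> float:
    """Run hill climbing from several starting points and keep the best result."""
    return max((hill_climb(f, s) for s in starts), key=f)


if __name__ == "__main__":
    stuck = hill_climb(quality, 0.0)
    best = climb_with_restarts(quality, [0.0, 1.5, 3.0, 4.5, 6.0])
    print(f"single run stops near x={stuck:.2f} with quality {quality(stuck):.2f}")
    print(f"with restarts, best is near x={best:.2f} with quality {quality(best):.2f}")
```

A single run starting from the left settles on the smaller peak and never learns that a better one exists across the valley. The restarts find it only because one of the starting points happened to land on the right slope, which is a fair description of what a good early sketch does for a human artist.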

The similarities in these processes are striking. You can see how the addition of time and computational power will allow the AI to improve its aesthetic sense, the range of pictures that it can correctly draw, and especially the amount of detail possible.

Human competitive advantages

A key strength of the human (not the only strength, but one that will be exceptionally hard to emulate) is the genius involved in those sketches at the start of the human process. When Jesse generated the Blood Rage and Everdell images, he typed in a description of what artists had already established. The artists were the generators of those ideas, and they generated those ideas way back when they were messing around with sketches that probably looked half-assed compared to the final product. Yet I don’t need to see those sketches to know that they were great sketches in the sense that those sketches triggered the serendipity resulting in the final pieces that we know and love today.

My point is this: The genius of an artist is as much in the conception of the piece as it is in the execution, and I don’t know of a straightforward approach for teaching this genius to the AI. AIs will have their own genius, when presented with suitably detailed prompts. But it will be a different genius, and AIs will manifest a kind of serendipity resembling rather than replacing that of humans (as we already see quite well).

My takeaway is this: Aspiring artists worrying about their future should invest as much in their ability to imagine as they do in the technical expertise required to execute the work. Both are important. But the former is more of a durable competitive advantage than the latter. And the artists who excel in their imagination will (I predict) be the ones who survive and thrive in the era of AI-generated art.

Bonus: Roundup of links to great content about game art

- Jasper at Pine Island Games discusses the value and cost of hiring an illustrator.

- Jamey at Stonemaier Games lists 200 artists and graphic designers that he enjoys.

- Enrico Tartarotti presents some art that highlights the creative serendipity of AIs.

- Andrew Bosley discusses the game art industries (video vs tabletop) and shows his process.

- Jason at Grey Gnome Games talks about the role of public domain art in his games.

(Feel free to post links in the comments below to other content that I should add to these links)

Notes to Self

I’ve only just gained the rough ability to illustrate single characters. It’s nothing compared to the work of Jenny Nystrom or Arthur Rackham, two illustrators from the early 1900s.

- Continue experimenting with AIs as a tool for…

  - Exploring serendipity

  - Eventually executing some aspects of a piece

- Watch for a chance to try out Dall-E 2 myself

- Continue investing heavily in the ability to imagine, sketch and explore new concepts